Social media giants are locked in a difficult fight over AI-driven tools that can produce inappropriate and unsafe content. Meta’s legal action against Joy Timeline HK, the maker of “Crush AI,” a notorious AI “nudify” app, highlights this tension. The lawsuit, a significant case for social media content moderation, is one part of a broader effort to protect brand integrity, user safety, and platform reputation. This article examines the case and the wider moderation challenge, an issue that affects users, brands, and social platforms worldwide.

The Case Against Crush AI

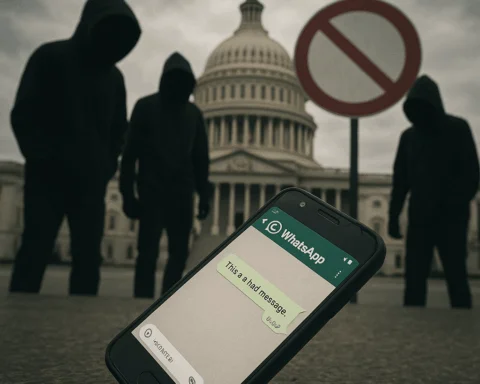

Meta, which owns Facebook and Instagram, has sued Hong Kong-based Joy Timeline HK for promoting Crush AI, an AI-powered app that creates non-consensual explicit imagery. Such apps pose a severe risk, particularly when their ads slip past advertising review. Crush AI reportedly ran around 8,000 ads on Meta’s platforms in just two weeks in 2025, which suggests a persistent and deliberate strategy for evading detection.

Joy Timeline HK allegedly used a network of fake accounts and rotating domains to evade Meta’s ad review process, and Meta removed the ads several times before taking legal action. Crush AI depended heavily on this advertising: Facebook and Instagram reportedly accounted for approximately 90% of its websites’ traffic. The lawsuit shows how important it is for digital platforms to keep refining their moderation and ad review systems to prevent misuse.

The Broader Context of AI Moderation and Social Platforms

This legal action comes at a time when platforms are racing to embed generative AI capabilities while struggling to keep content safe, particularly for minors. Researchers have documented a surge of AI nudify app ads across platforms including X, YouTube, and Reddit, a problem that Meta has likewise struggled to counteract.

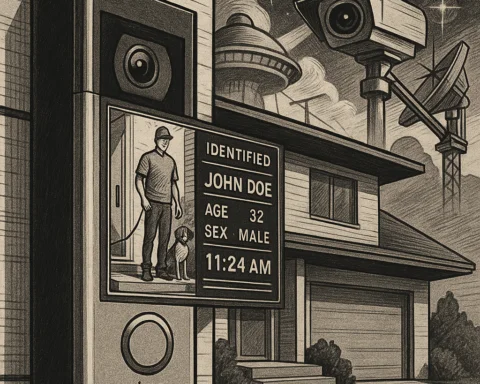

One avenue that Meta and peers such as TikTok, Google, and X (formerly Twitter) are exploring is improved detection technology that flags and removes ads for nudify apps quickly, including ads that look innocuous on the surface. In addition, the list of flagged terms, phrases, and emojis is being expanded to build a more thorough barrier against inappropriate advertising.
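The idea of a flagged-term list can be illustrated with a minimal Python sketch. It is not Meta’s system: the term list, the emoji, and the function names (`normalize`, `squash`, `flag_ad_text`) are all hypothetical, chosen only to show how text normalization helps a simple list catch obfuscated spellings.

```python
import re
import unicodedata

# Hypothetical blocklist for illustration only; not any platform's real list.
FLAGGED_TERMS = {"nudify", "undress", "remove clothes"}
FLAGGED_EMOJIS = {"\U0001F51E"}  # the "no one under eighteen" symbol


def normalize(text: str) -> str:
    """Fold look-alike Unicode forms, ignore case, and collapse whitespace."""
    folded = unicodedata.normalize("NFKC", text).casefold()
    return re.sub(r"\s+", " ", folded).strip()


def squash(text: str) -> str:
    """Drop everything except ASCII letters and digits ("n.u.d.i.f.y" -> "nudify")."""
    return re.sub(r"[^a-z0-9]", "", text)


def flag_ad_text(text: str) -> list[str]:
    """Return the flagged terms and emojis found in a piece of ad copy."""
    norm = normalize(text)
    squashed = squash(norm)
    hits = []
    for term in sorted(FLAGGED_TERMS):
        # Match the plain form, or the form with separators stripped out.
        if term in norm or squash(term) in squashed:
            hits.append(term)
    for emoji in sorted(FLAGGED_EMOJIS):
        if emoji in text:
            hits.append(emoji)
    return hits


if __name__ == "__main__":
    print(flag_ad_text("Try our N.u.d.i.f.y tool \U0001F51E"))
    print(flag_ad_text("Sundresses on sale"))
```

The second call flags an ordinary clothing ad because “sundresses” contains the substring “undress.” That false positive is the reason a list like this would serve only as a first-pass filter, with flagged items passed on to further review.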

Content Moderation Challenges

Content moderation poses a critical challenge for ad-funded platforms, which must balance enabling engagement against maintaining safety and brand trust. Part of the complexity is that moderation takes several forms, each with its own trade-offs: pre-moderation, post-moderation, reactive moderation, distributed moderation, automated moderation, and hybrid moderation.

Pre-moderation, where content is reviewed before publishing, ensures safety but can slow down interactions and demand significant resources.

Post-moderation allows content to be published instantly and reviewed for safety afterward, which preserves engagement but can expose users to offensive material before it is taken down.

Reactive moderation involves users reporting inappropriate content, integrating community involvement but risking delays in content removal.

Distributed moderation relies on community members rating or voting on content, fostering engagement but potentially leading to inconsistent enforcement if not properly monitored.

Automated moderation utilizes AI to swiftly scan huge volumes of content, ideal for scalability, yet it struggles with context understanding, leading to potential misclassification.

Hybrid moderation, marrying automated efficiency with human context recognition, aims to strike a balance, but involves higher expense and management demands.
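The hybrid approach can be sketched in a few lines of Python. The example assumes an automated classifier has already produced a risk score between 0 and 1. The thresholds, the `Decision` and `Thresholds` types, and the `route` function are hypothetical and meant only to show how automated decisions and human review might be combined.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass(frozen=True)
class Thresholds:
    # Illustrative values; a real system would tune these against labeled data.
    approve_below: float = 0.2
    remove_above: float = 0.9


def route(risk_score: float, thresholds: Thresholds = Thresholds()) -> Decision:
    """Automate the confident cases and send the ambiguous middle band to people."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= thresholds.remove_above:
        return Decision.REMOVE
    if risk_score < thresholds.approve_below:
        return Decision.APPROVE
    return Decision.HUMAN_REVIEW


if __name__ == "__main__":
    for score in (0.05, 0.5, 0.95):
        print(score, route(score).value)
```

Widening the band between the two thresholds sends more items to human reviewers, which improves handling of context at the price of the higher expense and management demands noted above.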

Building a Trustworthy Platform: The Role of Regulation and Community

Meta and other companies recognize that a robust content moderation system must be supplemented by regulation and community partnership. Meta has voiced support for the US Take It Down Act, which targets non-consensual intimate imagery, and for legislation that gives parents greater control over minors’ digital interactions. Its participation in collaborative efforts such as the Tech Coalition’s Lantern program likewise reflects the shared responsibility of tech companies to tackle child sexual exploitation online.

Simultaneously, it is crucial to consider community dynamics. Effective comment moderation policies can prevent toxic behavior, deflect spam and misinformation, and foster positive discussions—a pivotal part of constructing a secure environment for users and brands alike. This encompasses clear guidelines, transparent decision-making, and the active use of social listening to respond adaptively to community needs.

Conclusion: Towards a Safer Digital Future

As Meta’s legal battle against Crush AI illustrates, governing digital platforms in the age of AI is both daunting and essential. To combat the misuse of AI technologies, platforms are strengthening their moderation strategies, pursuing legal action, collaborating more closely with tech peers, and advocating for supportive legislation. These steps are part of a broader effort to build a digital ecosystem that protects users, supports brand values, and reduces the risk that innovation enables harm.

Navigating these waters will require an ongoing commitment to state-of-the-art technology, legal foresight, and community engagement. The ultimate goal is to harness the power of AI while safeguarding trust, respect, and integrity across the digital landscape—a balancing act that becomes increasingly pertinent as the intersection between technology and society continues to evolve.