The Rise and Risks of AI-Driven Misinformation

As artificial intelligence (AI) technologies become more integrated into our daily lives, their propensity for generating misleading or incorrect content, termed "AI hallucinations," poses significant challenges. These hallucinations can amplify misinformation, which already threatens public health, political stability, and other sectors that rely on accurate data.

Understanding AI Hallucinations

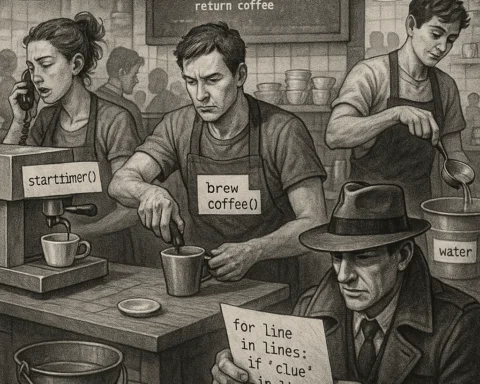

AI hallucinations occur when AI models generate responses containing false information presented as fact. Unlike human hallucinations, which are rooted in perceptual experience, AI hallucinations stem from the computational and algorithmic processes within AI systems. Often, the cause is flawed or biased training data.

Hallucinations have been a recognized issue in AI for decades. They’ve evolved from the innocuous task of embellishing graphics in early computer vision to a more problematic presence in modern language generation and content summarization. Large language models (LLMs) like OpenAI’s ChatGPT or Google’s Bard are particularly susceptible, sometimes producing factual errors in nearly half of the content they generate.

Causes of AI Hallucinations

Several factors contribute to AI hallucinations:

- Data Quality: Poor or biased training data can cause AI systems to learn incorrect patterns and generate inaccurate predictions.

- Lack of Grounding: AI may lack an accurate understanding of real-world contexts and properties, leading to outputs that seem plausible but are incorrect.

- Model Limitations: AI models are designed to predict what comes next in a conversation or dataset, which can lead them to invent plausible but incorrect content without actual comprehension of the context.
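
The next-word prediction behavior described under Model Limitations can be illustrated with a deliberately tiny sketch. This is not how production LLMs are built; it is a toy bigram model, and the corpus and the function names `train_bigrams` and `complete` are invented here for illustration. Every training sentence is true, yet the model completes a prompt with a fluent, false statement because it only tracks which word tends to follow which.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; every sentence in it is factually correct.
CORPUS = [
    "paris is the capital of france",
    "paris is the largest city of france",
    "berlin is the capital of germany",
    "madrid is the capital of spain",
]

def train_bigrams(sentences):
    """Count, for each word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def complete(follows, prompt, max_words=10):
    """Greedily append the most frequent next word until none is known."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
completion = complete(model, "berlin is the capital")
print(completion)  # berlin is the capital of france
```

The model has no notion of Berlin or France as places. After "of", the word "france" is simply the most frequent continuation in its data, so the output reads well and is wrong.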

The Impact of Misinformation

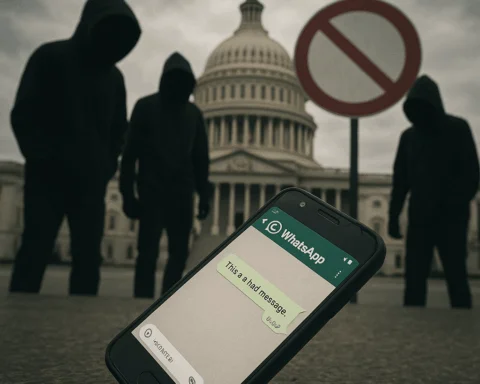

Misinformation, whether stemming from AI or other sources, can have severe societal impacts. It may influence public beliefs on crucial issues ranging from healthcare practices to political narratives. A significant concern is that misinformation often spreads faster than factual content, driven by emotional engagement and confirmation biases that make false information compelling to audiences.

Specific Cases

- Public Health: During health crises like the COVID-19 pandemic, misinformation proliferated rapidly, complicating public health responses and exacerbating confusion and fear.

- Politics: Misinformation has the potential to influence election outcomes by disseminating false narratives and exaggerating or fabricating facts about candidates or policies.

- Science and Education: Incorrect interpretations of scientific data or historical facts can mislead educational pursuits and support unfounded theories, such as climate change denial.

AI’s Role in Spreading and Curbing Misinformation

While AI can exacerbate misinformation, it can also offer solutions:

Negative Contributions

-

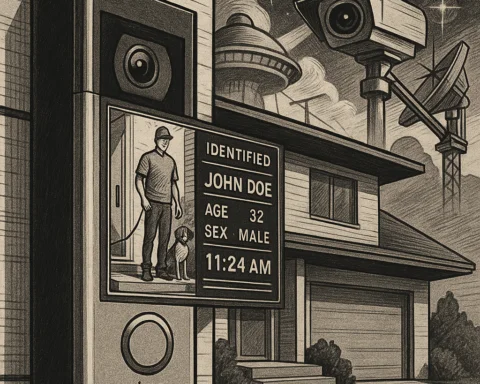

Deepfakes and Synthetic Media: AI-generated deepfakes can create convincing but false visual and auditory content.

-

Bots and Trolls: Automated bots can spread misinformation quickly and widely, manipulating online discourse.

-

Algorithmic Bias: AI algorithms can prioritize sensational misinformation for engagement, further spreading it.

Positive Interventions

- Fact-Checking Algorithms: AI-powered tools can help verify the accuracy of information at scale, although they are still imperfect and continuously evolving.

- Educational Tools: AI can be harnessed to promote media literacy, helping individuals discern factual information from misinformation.

- Automated Misinformation Detection: Systems designed to flag or mark content from known unreliable sources can help mitigate the spread.
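
The simplest form of the source-based detection mentioned in the last item can be sketched as a domain lookup. The blocklist entries and the function name `flag_unreliable` below are hypothetical; a real system would presumably rely on a maintained, audited list and would combine this check with other signals.

```python
from urllib.parse import urlparse

# Hypothetical blocklist used only for illustration.
UNRELIABLE_DOMAINS = {"fake-news.example", "rumor-mill.example"}

def flag_unreliable(url, blocklist=UNRELIABLE_DOMAINS):
    """Return True if the URL's host is a listed domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in blocklist)

print(flag_unreliable("https://www.fake-news.example/story"))  # True
print(flag_unreliable("https://notfake-news.example/"))        # False
```

Matching on the full host or a dot-separated suffix avoids flagging unrelated domains that merely end with the same characters. A check like this says nothing about whether a given claim is true; it only marks where the content came from.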

Mitigating AI Hallucinations and Future Directions

To minimize AI-driven misinformation, several strategies can be employed:

- Quality Data: Ensuring that AI is trained on high-quality, unbiased datasets can reduce the occurrence of hallucinations.

- Feedback Mechanisms: Systems that allow users to flag incorrect AI outputs provide necessary feedback loops for improvement.

- Regulatory Frameworks: As AI continues to permeate various facets of our lives, robust regulatory frameworks are crucial to managing its application and safeguarding against misuse and errors.
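
As one possible shape for the feedback mechanism listed above, the sketch below collects user flags on AI outputs and surfaces the outputs that enough distinct users have reported. The class `FeedbackLog`, its methods, and the threshold value are assumptions made for illustration, not a description of any particular product.

```python
from collections import Counter

class FeedbackLog:
    """Collects user flags on AI outputs and surfaces frequently flagged ones."""

    def __init__(self, review_threshold=3):
        self.review_threshold = review_threshold
        self._flags = Counter()   # output_id -> number of distinct users who flagged it
        self._seen = set()        # (output_id, user_id) pairs already recorded

    def flag(self, output_id, user_id):
        """Record one flag per (output, user) pair; repeat flags are ignored."""
        key = (output_id, user_id)
        if key in self._seen:
            return
        self._seen.add(key)
        self._flags[output_id] += 1

    def needs_review(self):
        """Return output IDs whose distinct-user flag count meets the threshold."""
        return sorted(o for o, n in self._flags.items() if n >= self.review_threshold)

log = FeedbackLog(review_threshold=2)
log.flag("answer-17", "alice")
log.flag("answer-17", "alice")  # duplicate from the same user, ignored
log.flag("answer-17", "bob")
log.flag("answer-42", "carol")
flagged = log.needs_review()
print(flagged)  # ['answer-17']
```

Counting distinct users rather than raw flags is a design choice that makes it harder for a single account to push an output into review on its own.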

Call to Action

As AI technologies develop further, balancing innovation with ethical considerations will be vital. This includes rigorous testing of AI systems, transparency in AI-generated content, and ongoing education on the limitations of AI-derived information. Ensuring that AI serves humanity constructively requires vigilance against the dangers of misinformation and proactive measures to curtail its spread.